Intro to causal inference

Good and bad controls

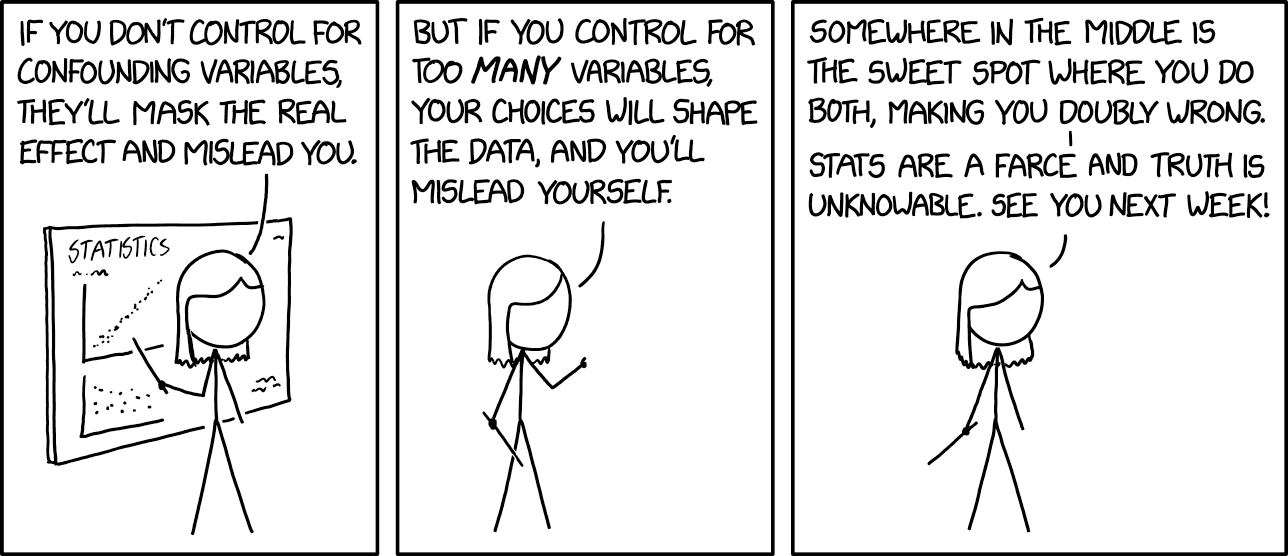

Researchers have long understood that adding variables (“controls”) to a regression model is necessary to eliminate “omitted variable bias”. Scientists exposed only to this view may get the impression that adding “more controls” to a regression model is always better. However some variables when added to the regression equation can produce unintended discrepancies between the regression coefficient and the effect that the coefficient is expected to represent. Such variables have become known as “bad controls”. This tutorial describes graphical tools for understanding, visualizing, and resolving the problem of selecting variables for inclusion in a regression model through a series of illustrative simulations in R. An applied example will be provided on the role of intergenerational transfer to determine whether grandparents directly affect the wealth, education, status of their grandchildren.

Intro

I am Richard Morris.

www.sydney.edu.au/medicine-health/about/our-people/academic-staff/richard-morris.html

…

Background

\[

\begin{align}

y =& \ bx \ + \ e \\

b =& \ cov(x, y) / cov(x, x) \\

\end{align}

\]

\(b\) is unbiased when:

\[ \begin{align} cov(x, e) = 0 \\ \end{align} \]

Background

# Simulating data:

N <- 200 # number of obs./subjects

X <- rnorm(N)

Y <- rnorm(N, 1 - X) # Y is dependent on X

summary(lm(Y ~ X))

Call:

lm(formula = Y ~ X)

Residuals:

Min 1Q Median 3Q Max

-2.5466 -0.7045 -0.0519 0.6637 3.2969

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 0.97786 0.07065 13.84 <2e-16 ***

X -0.99604 0.07318 -13.61 <2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 0.9988 on 198 degrees of freedom

Multiple R-squared: 0.4834, Adjusted R-squared: 0.4808

F-statistic: 185.3 on 1 and 198 DF, p-value: < 2.2e-16Forks (confounds)

\[ \begin{align} y =& \ b_1x \ + \ b_2z \ + \ e \\ b_1 =& \ cov(x, y | z) / var(x | z) \end{align} \]

Forks (confounds)

N <- 200 # number of obs./subjects

Z <- rnorm(N)

X <- rnorm(N, 1 + Z) # X depends on Z

Y <- rnorm(N, 1 + 0*X + 2*Z) # Y is dependent on Z, not X

summary(lm(Y ~ X)) # estimate of X should be 0

Call:

lm(formula = Y ~ X)

Residuals:

Min 1Q Median 3Q Max

-4.1195 -0.9933 -0.0924 1.1107 5.4105

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) -0.09482 0.15379 -0.617 0.538

X 0.99664 0.09470 10.524 <2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 1.768 on 198 degrees of freedom

Multiple R-squared: 0.3587, Adjusted R-squared: 0.3555

F-statistic: 110.8 on 1 and 198 DF, p-value: < 2.2e-16Forks (confounds)

Call:

lm(formula = Y ~ X + Z)

Residuals:

Min 1Q Median 3Q Max

-2.46314 -0.64511 0.03695 0.60648 2.08276

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 1.01843 0.09422 10.809 <2e-16 ***

X -0.03631 0.06715 -0.541 0.589

Z 1.99847 0.08742 22.862 <2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 0.9276 on 197 degrees of freedom

Multiple R-squared: 0.8245, Adjusted R-squared: 0.8227

F-statistic: 462.6 on 2 and 197 DF, p-value: < 2.2e-16Problem: Unmeasured confounds

N <- 200 # number of obs./subjects

U <- rnorm(N)

X <- rnorm(N, 1*U) # X depends on U

Y <- rnorm(N, 1*X + 2*U) # Y depends on X and U

summary(lm(Y ~ X)) # estimate of X should be 1

Call:

lm(formula = Y ~ X)

Residuals:

Min 1Q Median 3Q Max

-3.4101 -1.2809 -0.0926 1.1634 5.2391

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 0.01629 0.12133 0.134 0.893

X 2.01297 0.08348 24.112 <2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 1.715 on 198 degrees of freedom

Multiple R-squared: 0.746, Adjusted R-squared: 0.7447

F-statistic: 581.4 on 1 and 198 DF, p-value: < 2.2e-16Colliders

X <- rnorm(N)

Y <- rnorm(N) # Y is independent of X

Z <- rnorm(N, 2*X + 1*Y) # Z depends on X and Y

summary(lm(Y ~ X + Z)) # estimate of X should be 0

Call:

lm(formula = Y ~ X + Z)

Residuals:

Min 1Q Median 3Q Max

-1.81241 -0.42887 -0.01173 0.42913 2.26573

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 0.02104 0.04885 0.431 0.667

X -0.98516 0.08510 -11.577 <2e-16 ***

Z 0.48411 0.03492 13.864 <2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

Residual standard error: 0.6895 on 197 degrees of freedom

Multiple R-squared: 0.494, Adjusted R-squared: 0.4889

F-statistic: 96.18 on 2 and 197 DF, p-value: < 2.2e-16Problem: Measured confounds

X <- rnorm(N)

Y <- rnorm(N) # Y is independent of X

Z <- rnorm(N, 2*X + 1*Y) # Z depends on X and Y

compare(

lm(Y ~ X + Z),

lm(Y ~ X)

) Coefficients:

Estimate Std. Error t value Pr(>|t|)

X (+ Z) -0.972343 0.087097 -11.1639 <2e-16 ***

X -0.107300 0.075666 -1.4181 0.1577

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1Prevailing wisdom

- Condition on all pre-treatment predictors of the treatment assignment (e.g., propensity score logic)

- All post-treatment variables are “bad controls”

Mediators

X <- rnorm(N)

Z <- rnorm(N, 1*X) # Z depends on X

Y <- rnorm(N, 1 + 1*Z) # Y depends on Z

compare(

lm(Y ~ X + Z), # Conditional effect of X = 0

lm(Y ~ X) # Total effect of X = 1 (via Z)

)Coefficients:

Estimate Std. Error t value Pr(>|t|)

X (+ Z) 0.083811 0.109865 0.7629 0.4465

X 1.149480 0.099836 11.5137 <2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1Mediators II

set.seed(123)

X <- rnorm(N)

Z <- rnorm(N)

M <- rnorm(N, .5*X + 3*Z) # M depends on X and Z

Y <- rnorm(N, 1 + 2*M) # Y depends on M

compare(

lm(Y ~ X + Z), # Z improves precision

lm(Y ~ X)

)Coefficients:

Estimate Std. Error t value Pr(>|t|)

X (+ Z) 0.88714 0.16708 5.3098 2.947e-07 ***

X 0.71220 0.47851 1.4884 0.1382

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1Bias Amplification

U <- rnorm(N)

Z <- rnorm(N)

X <- rnorm(N, 1*Z + 2*U) # X depends on U and Z

Y <- rnorm(N, 0*X + 2*U) # Y depends on U, not X

compare(

lm(Y ~ X + Z), # True effect of X = 0

lm(Y ~ X)

) Coefficients:

Estimate Std. Error t value Pr(>|t|)

X (+ Z) 0.778428 0.037786 20.601 < 2.2e-16 ***

X 0.659921 0.040536 16.280 < 2.2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1Instruments

x <- predict(lm(X ~ Z)) # get predicted values of X

summary(lm(Y ~ x)) # matches true effect of X = 0

Call:

lm(formula = Y ~ x)

Residuals:

Min 1Q Median 3Q Max

-5.7985 -1.3678 -0.1371 1.4928 6.6315

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 0.14341 0.16169 0.887 0.376

x -0.05111 0.16397 -0.312 0.756

Residual standard error: 2.276 on 198 degrees of freedom

Multiple R-squared: 0.0004905, Adjusted R-squared: -0.004557

F-statistic: 0.09718 on 1 and 198 DF, p-value: 0.7556Example: Intergenerational transfer

https://academic.oup.com/esr/article/34/6/603/5094485

Example: Intergenerational transfer

Example: Intergenerational transfer

U <- rnorm(N) # Shared parent-child influences

X <- rnorm(N) # Grandparents

Z <- rnorm(N, 2*X + 2*U) # Parents

Y <- rnorm(N, 1 + X + Z + 2*U) # Direct effect of X = 1

compare(

lm(Y ~ X + Z),

lm(Y ~ X)

) Coefficients:

Estimate Std. Error t value Pr(>|t|)

X (+ Z) -0.66469 0.11966 -5.5548 8.93e-08 ***

X 3.15471 0.29039 10.8638 < 2.2e-16 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1Conclusions

In general:

- Parents of X, which are not necessary for identification (see Instruments), are harmful for precision

- Parents of Y which do not spoil identification are beneficial for precision

https://www.dagitty.net/dags.html

…